Unpacking the o4-mini Context Window: What It Means for AI Conversations Today

Have you ever been chatting with an AI, only for it to forget what you just talked about? It's frustrating. How well an AI "remembers" your back-and-forth is largely determined by its "context window," and that limit has a big effect on how helpful a model can be, especially when you want something that feels like a real conversation.

If you work with AI, or are simply curious about how these systems tick, the o4-mini context window is worth understanding. It shapes how well the model performs, how quickly it answers, and how long a chat can go on before details start getting muddled. Developers are constantly working to make these models better at keeping up with us.

The o4-mini context window is getting a lot of attention as people look at how models can handle more complex requests and longer discussions without losing their way. A larger working memory is a key part of making AI feel more natural, more capable, and more human in its interactions, so the topic is worth exploring.

Table of Contents

- Understanding the AI Context Window: Your AI's Short-Term Memory

- o4-mini: A Closer Look at Its Context Abilities

- o4-mini vs. o3-mini: What's the Difference?

- The Impact of a 200k Token Window for Users

- In-Context Learning: How o4-mini Uses Your Prompts

- Snapshot Consistency in o4-mini

- Limitations and What They Mean

- Frequently Asked Questions About AI Context Windows

- Looking Ahead: The Future of AI Memory

Understanding the AI Context Window: Your AI's Short-Term Memory

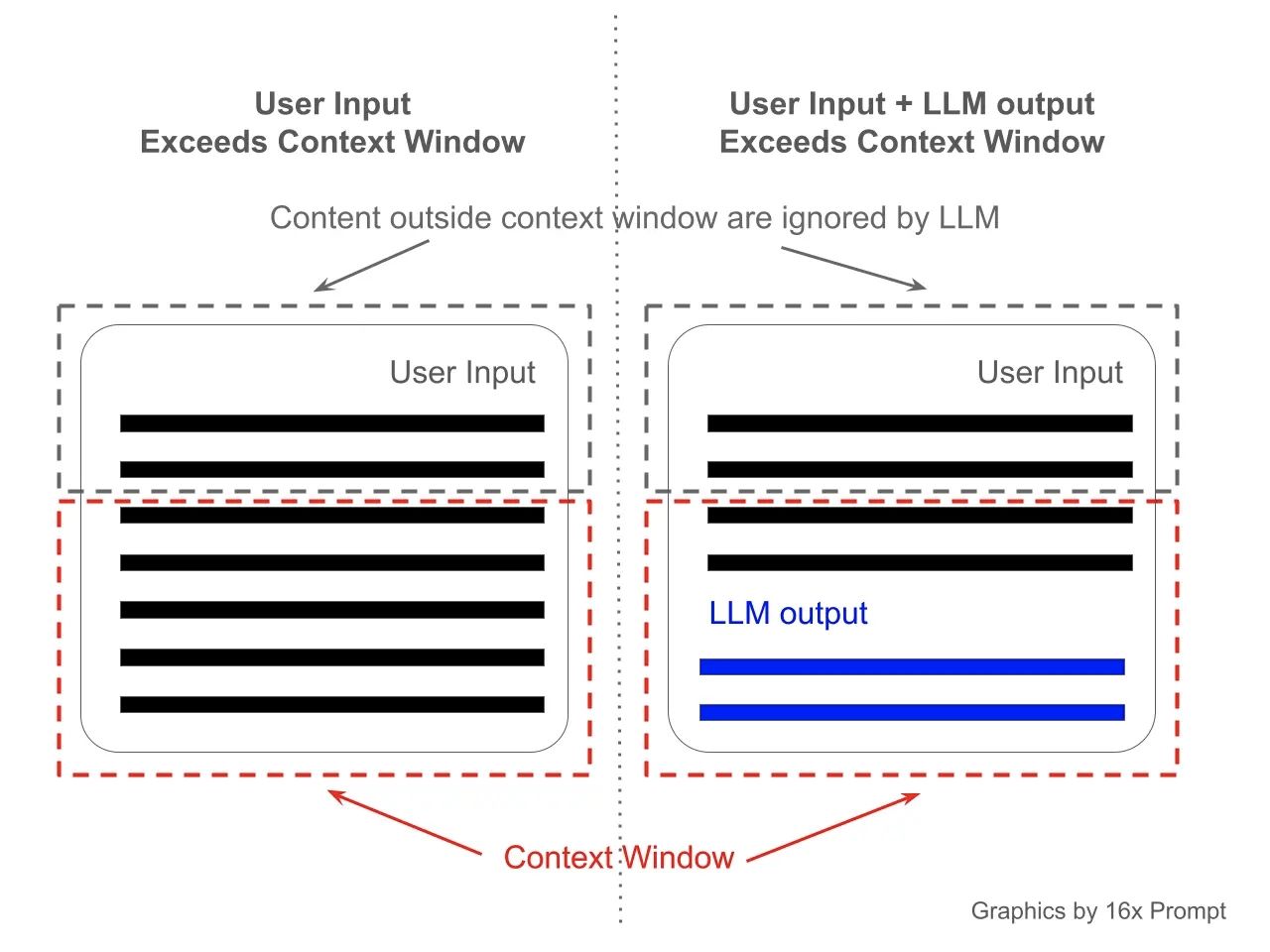

When we talk about an AI's "context window," we mean how much information the model can hold in its immediate "mind" at any given moment. Think of it as a very focused short-term memory. Everything counts toward it: every word you send, every question, and every part of the model's own replies. The window is the slice of conversation history the AI can actually consider when generating its next reply. It is measured in tokens, small chunks of text; in English, a token averages roughly three-quarters of a word.

A bigger context window lets the AI remember more of your conversation, which allows for longer, more involved discussions. This matters for tasks where the AI needs to keep track of many details over time. With too small a window, an AI might forget a detail you mentioned just a few messages ago, which makes the interaction feel disjointed.

For example, if you're asking an AI to help plan a trip and keep adding details like "I also need a car rental" or "make sure it's pet-friendly," a larger context window helps it keep all of those requirements in view as the conversation goes on. It's about maintaining coherence and relevance throughout the interaction, which is what we all want from these systems.
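Under the hood, a chat application has to decide what to send once the history outgrows the window. The sketch below assumes a simple "drop the oldest messages first" policy and a rough four-characters-per-token estimate instead of o4-mini's real tokenizer; `estimate_tokens` and `trim_history` are hypothetical helpers, not part of any SDK.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    """Rough token estimate (~4 characters per token for English text).

    A heuristic only; exact counts depend on the model's tokenizer.
    """
    return max(1, (len(text) + 3) // 4)


def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep the newest messages whose estimated tokens fit in `budget`.

    Walks backwards from the latest message and stops at the first one that
    would overflow, so everything older than that is "forgotten".
    """
    kept: List[Message] = []
    used = 0
    for msg in reversed(messages):
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


chat = [
    {"role": "user", "content": "Plan a 3-day trip to Lisbon."},
    {"role": "assistant", "content": "Sure! Day 1: Alfama and the castle..."},
    {"role": "user", "content": "I also need a car rental."},
    {"role": "user", "content": "Make sure the hotel is pet-friendly."},
]
for m in trim_history(chat, budget=20):  # deliberately tiny budget
    print(m["content"])
```

With that tiny 20-token budget, the two most recent requirements survive but the original Lisbon request is dropped, which is exactly the kind of forgetting described above. Real applications often summarize older turns instead of discarding them outright.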

o4-mini: A Closer Look at Its Context Abilities

The o4-mini model has been getting some buzz lately. It's designed to respond faster than its predecessor, o3-mini, while keeping the same generous 200,000-token context window. That means it can hold onto a long chat history, so conversations stay connected and flow smoothly.

Faster responses are a big plus for anyone who uses AI for quick tasks or needs immediate feedback. Combined with its generous context window, that speed makes o4-mini a capable tool for applications where time is of the essence.

The model also reportedly delivers accuracy comparable to o3, especially at higher reasoning settings. So it isn't just faster; it also maintains a good level of precision. That balance of speed, a long context, and solid accuracy is a compelling combination for a modern AI system.
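If you call o4-mini through OpenAI's API, the "reasoning settings" mentioned above appear to correspond to the `reasoning_effort` parameter of the Chat Completions endpoint, which accepts `"low"`, `"medium"`, or `"high"`. This sketch only builds the request body and sends nothing; `build_request` is a hypothetical helper, so check the current API reference before relying on the exact field names.

```python
import json
from typing import Dict, List

VALID_EFFORTS = {"low", "medium", "high"}


def build_request(prompt: str, effort: str = "medium") -> Dict[str, object]:
    """Build a Chat Completions-style request body for o4-mini.

    Higher effort lets the model reason longer before answering, trading
    speed and cost for thoroughness.
    """
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {sorted(VALID_EFFORTS)}")
    messages: List[Dict[str, str]] = [{"role": "user", "content": prompt}]
    return {"model": "o4-mini", "reasoning_effort": effort, "messages": messages}


body = build_request("Prove that the square root of 2 is irrational.", effort="high")
print(json.dumps(body, indent=2))
```

With the official Python SDK, you could pass this dictionary as `client.chat.completions.create(**body)`. Higher effort usually means more hidden reasoning tokens, so responses take longer and cost more.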

o4-mini vs. o3-mini: What's the Difference?

Side by side, o4-mini and o3-mini share the same context window: both accept 200,000 tokens. That might sound like a lot, and in many ways it is, enough for quite extensive conversations and detailed inputs.

However, this 200,000-token window is significantly smaller than what some competitors offer; some models advertise context windows of up to a million tokens. The gap matters most when a model has to handle extremely long documents or very drawn-out discussions.

Even so, o4-mini delivers faster responses while maintaining good accuracy. Its context window isn't the largest on the market, but its speed and accuracy make it a strong contender for many everyday uses. It's a balance between memory size and practical efficiency.

The Impact of a 200k Token Window for Users

For most practical purposes, a 200,000-token context window is quite generous. It means o4-mini can handle long prompts and extended conversations without easily losing its way, which is especially helpful for tasks that require the AI to remember many specific details you've provided over time.

For example, if you're asking the AI to summarize a lengthy document or help draft a complex report, the larger window lets you supply all the necessary background information in one go. The model can then work from the full text and give you a more relevant and accurate result, without you having to keep reminding it of things.

Handling long inputs also means less frustration. You don't have to break your requests into tiny pieces, which speeds up your workflow and makes the interaction feel more like a conversation with someone who actually remembers what you've said.
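Before sending a long report, it helps to check whether it will fit. A common rule of thumb is that one token is about four characters, or three-quarters of a word, of English text, so 200,000 tokens is roughly 150,000 words. The check below uses that heuristic rather than a real tokenizer, and `fits_in_context` is a hypothetical helper. It also reserves room for the reply, because the model's output, including the hidden reasoning tokens that o-series models generate, shares the same window.

```python
CONTEXT_WINDOW = 200_000  # o4-mini's context window in tokens (per the article)
CHARS_PER_TOKEN = 4       # rough rule of thumb for English, not a real tokenizer


def estimated_tokens(text: str) -> int:
    """Approximate token count via ceiling division by CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(document: str, instructions: str, reserved_output: int) -> bool:
    """Return True if the prompt plus room for the reply fits in the window."""
    needed = estimated_tokens(document) + estimated_tokens(instructions) + reserved_output
    return needed <= CONTEXT_WINDOW


report = "x" * 600_000  # stand-in for a ~600,000-character report (~150,000 tokens)
print(fits_in_context(report, "Summarize the key findings.", reserved_output=25_000))  # True
```

For an exact count you would use the model's actual tokenizer; the heuristic is only good for a quick go/no-go decision with some safety margin.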

In-Context Learning: How o4-mini Uses Your Prompts

One fascinating aspect of models like o4-mini is "in-context learning." The AI doesn't rewrite its underlying weights, its "core programming," every time you chat. Instead, it uses your prompts and the ongoing conversation to adapt its responses on the fly.

Every prompt you send, plus the whole back-and-forth, becomes material the model can learn from in the moment. It's as if the AI were taking notes during your conversation and consulting them for its next answer. That's why the context window matters so much: it dictates how many of those "notes" the AI can actively refer to.

This lets the AI pick up your specific needs and preferences within a single conversation, without being retrained. The interaction feels more personalized and more responsive to your particular way of speaking or asking questions. The catch is that this learning lasts only while the relevant material stays inside the context window.
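In API terms, in-context learning often takes the form of few-shot prompting: you place worked examples in the conversation and the model imitates them. The hypothetical `few_shot_messages` helper below builds a message list in the role/content format OpenAI's chat APIs use; it sends nothing, and the date-formatting task is purely an illustration.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def few_shot_messages(instruction: str,
                      examples: List[Tuple[str, str]],
                      query: str) -> List[Message]:
    """Teach a task through examples placed in the context window.

    Nothing is retrained: the examples only influence this one request.
    """
    # Assumed: OpenAI's o-series models take instructions under the
    # "developer" role ("system" is the older name for the same idea).
    messages: List[Message] = [{"role": "developer", "content": instruction}]
    for user_text, ideal_reply in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": ideal_reply})
    messages.append({"role": "user", "content": query})  # the real question
    return messages


prompt = few_shot_messages(
    "Rewrite each date in ISO format (YYYY-MM-DD).",
    [("March 5, 2024", "2024-03-05"), ("July 20, 1969", "1969-07-20")],
    "December 1, 2025",
)
for m in prompt:
    print(f'{m["role"]:>9}: {m["content"]}')
```

Every example you add costs tokens, so the size of the context window also caps how many examples you can show the model.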

Snapshot Consistency in o4-mini

Another useful feature of models like o4-mini is "snapshots," which let you lock in a specific version of the model. Because a snapshot doesn't change, its performance and behavior remain consistent over time, which is very important for developers and businesses that rely on it.

Imagine you're building an application on top of an AI model. You want it to behave predictably and give consistent results every time someone uses your app. Snapshots help by ensuring the underlying model doesn't suddenly change its responses or performance characteristics, giving you a stable foundation.

This consistency is a big deal for reliability. You can trust that the AI will perform as expected, day in and day out, without unexpected variations. It helps in building dependable AI-powered tools and gives users and developers peace of mind.
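In OpenAI's API, a snapshot is selected with a dated model name, while the bare alias can be moved to newer versions over time. This sketch checks a deployment setting for that pattern. The `alias-YYYY-MM-DD` convention is an assumption based on how OpenAI names its snapshots, and the name `o4-mini-2025-04-16` should be verified against the provider's current model list; `is_pinned` and `require_pinned` are hypothetical helpers.

```python
import re

# Assumed convention: "<alias>-YYYY-MM-DD" names a fixed snapshot, while a
# bare alias such as "o4-mini" may point to newer versions over time.
SNAPSHOT_RE = re.compile(r"[a-z0-9.-]+-\d{4}-\d{2}-\d{2}")


def is_pinned(model: str) -> bool:
    """True if the model name ends in a date stamp, i.e. names a snapshot."""
    return SNAPSHOT_RE.fullmatch(model) is not None


def require_pinned(model: str) -> str:
    """Reject floating aliases so production behavior can't shift silently."""
    if not is_pinned(model):
        raise ValueError(f"{model!r} is an alias; pin a dated snapshot instead")
    return model


MODEL = require_pinned("o4-mini-2025-04-16")  # assumed snapshot name
print(MODEL)
```

Failing fast at startup is a deliberate choice: it's cheaper to catch an unpinned model name in a config check than to discover later that results drifted after the alias was updated.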

Limitations and What They Mean

While o4-mini's 200,000-token context window is substantial, some competitors offer significantly larger windows, reaching up to a million tokens. Depending on the task, that difference can impose real limitations.

For extremely long material, such as a very long book or a vast archive of legal texts, a 200,000-token window may force you to break the content into smaller chunks. That makes it harder to process truly massive amounts of information in a single pass, which is worth keeping in mind for data-heavy applications.

However, for most day-to-day conversational uses and many business applications, 200,000 tokens is more than enough. It's about finding the right tool for the job: o4-mini may not handle every extreme-length edge case, but it performs very well on the vast majority of common tasks, which is what matters for most people.
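When a document is too big for one request, a common workaround is to split it into overlapping chunks and process them one at a time, for example summarizing each chunk and then summarizing the summaries. The hypothetical `chunk_text` helper below sizes chunks with the same four-characters-per-token heuristic, so leave a safety margin below the real 200,000-token limit.

```python
from typing import List


def chunk_text(text: str, max_tokens: int, overlap_tokens: int = 0,
               chars_per_token: int = 4) -> List[str]:
    """Split text into pieces of roughly max_tokens tokens each.

    Neighboring chunks share about overlap_tokens tokens of text, so material
    near a boundary appears in both chunks.
    """
    if max_tokens <= 0 or not 0 <= overlap_tokens < max_tokens:
        raise ValueError("need max_tokens > 0 and 0 <= overlap_tokens < max_tokens")
    size = max_tokens * chars_per_token                      # chunk length in characters
    step = (max_tokens - overlap_tokens) * chars_per_token   # how far each chunk advances
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):  # this chunk already reaches the end
            break
    return chunks


archive = "x" * 2_000_000  # stand-in for ~500,000 tokens of legal text
pieces = chunk_text(archive, max_tokens=150_000, overlap_tokens=2_000)
print(len(pieces))  # 4
```

The 150,000-token chunk size here is an arbitrary choice that leaves headroom for instructions and the model's reply within the 200,000-token window.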

Frequently Asked Questions About AI Context Windows

People often ask how these AI models work, especially when it comes to their memory. Here are a few common questions about context windows.

What does "context window" actually mean for an AI?

The context window is the amount of text, measured in tokens, that an AI model can "see" or "remember" from the current conversation when generating its next response. It includes both your prompts and the AI's previous replies. A larger window lets the AI keep track of more of the chat history, so conversations feel more coherent and natural.

Why is a larger context window important for AI models?

A larger context window lets AI models handle longer, more complex conversations and documents without losing track of important details. Because more of your ongoing interaction is available to it, the model can grasp the full scope of your request and give more relevant answers, which is key for advanced applications.

How does o4-mini's context window compare to other models?

The o4-mini model has a 200,000-token context window. That's substantial and works well for many tasks, but some competing models offer even larger windows, up to a million tokens. So while it isn't the biggest, o4-mini balances this with faster responses and good accuracy, which is a different kind of advantage.

Looking Ahead: The Future of AI Memory

The development of AI models, especially their context windows, is a really exciting area. As o4-mini shows, there's a constant push to make models faster, more accurate, and better at remembering our conversations. One reported measurement puts o4-mini's empirical throughput at around 85.2 tokens per second, a solid step in efficiency.
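To put the 85.2 tokens-per-second figure in perspective, a quick back-of-the-envelope estimate helps. This assumes the figure is a steady output speed and ignores time-to-first-token and network overhead, so real response times will be somewhat longer; `generation_seconds` is just an illustrative helper.

```python
THROUGHPUT_TPS = 85.2  # reported o4-mini throughput, tokens per second


def generation_seconds(output_tokens: int,
                       tokens_per_second: float = THROUGHPUT_TPS) -> float:
    """Estimated time to stream output_tokens at a constant rate."""
    return output_tokens / tokens_per_second


for n in (500, 2_000, 10_000):
    print(f"{n:>6,} tokens -> about {generation_seconds(n):.1f} s")
```

At that rate, a 2,000-token answer takes roughly 23.5 seconds to stream, while a short 500-token reply arrives in about 6 seconds.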

Future advances will likely bring even larger context windows, with million-token capacities becoming more common, along with smarter ways for models to use that context. Size isn't everything; what also matters is how intelligently the AI can retrieve and apply the relevant information from a vast memory. We recently published a paper introducing Zep, a tool that helps manage long-term memory for AI applications.
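Here is a toy illustration of using context more intelligently: instead of pasting everything into the window, pick only the stored notes most related to the question. Plain keyword overlap stands in for the embedding search or knowledge-graph lookups that real memory systems use; this is not how Zep or any particular product works, and `select_relevant` is a hypothetical helper.

```python
import re
from typing import List, Set


def words(text: str) -> Set[str]:
    """Lower-cased alphanumeric words in text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_relevant(notes: List[str], question: str, budget_tokens: int,
                    chars_per_token: int = 4) -> List[str]:
    """Return the notes sharing the most words with the question, most
    relevant first, without exceeding budget_tokens (estimated)."""
    q = words(question)
    scored = [(len(q & words(n)), n) for n in notes]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep order
    picked: List[str] = []
    used = 0
    for score, note in scored:
        if score == 0:
            break  # remaining notes share no words with the question
        cost = -(-len(note) // chars_per_token)  # rough token estimate
        if used + cost <= budget_tokens:
            picked.append(note)
            used += cost
    return picked


notes = [
    "The hotel must be pet-friendly.",
    "We land in Lisbon on Friday evening.",
    "Total budget is 1500 euros.",
]
print(select_relevant(notes, "Which hotel is pet-friendly?", budget_tokens=50))
```

The hotel note ranks first, but the budget note also sneaks in only because it shares the word "is" with the question, one reason real systems rely on smarter similarity measures than raw word overlap.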

Ultimately, the goal is AI interactions that feel seamless, intuitive, and genuinely helpful, whether you're asking a quick question or engaging in a deep, multi-turn discussion. The o4-mini context window is a clear step in that direction and shows how much progress is being made in making AI a natural part of our daily lives.

The journey toward more capable AI keeps moving forward, and the context window is a big part of it. It's really about making the technology serve us better, and that's a goal we can all get behind, don't you think?